A beginner-friendly introduction to compilers: follow along as we build a compiler from scratch, or fork the code on GitHub and add your own optimizations too! In this episode: propagating constant values to eliminate more instructions.

Last time on Compiler Adventures, we wrote an optimization that eliminates no-op instructions like division by one: div x 1. At the end of the post, I challenged you to find other instructions that are always no-ops regardless of the register's value. There are at least two more: "add zero" (e.g. add x 0) and "multiply by one" (e.g. mul x 1) — neither of those examples has any effect on register x regardless of its value.

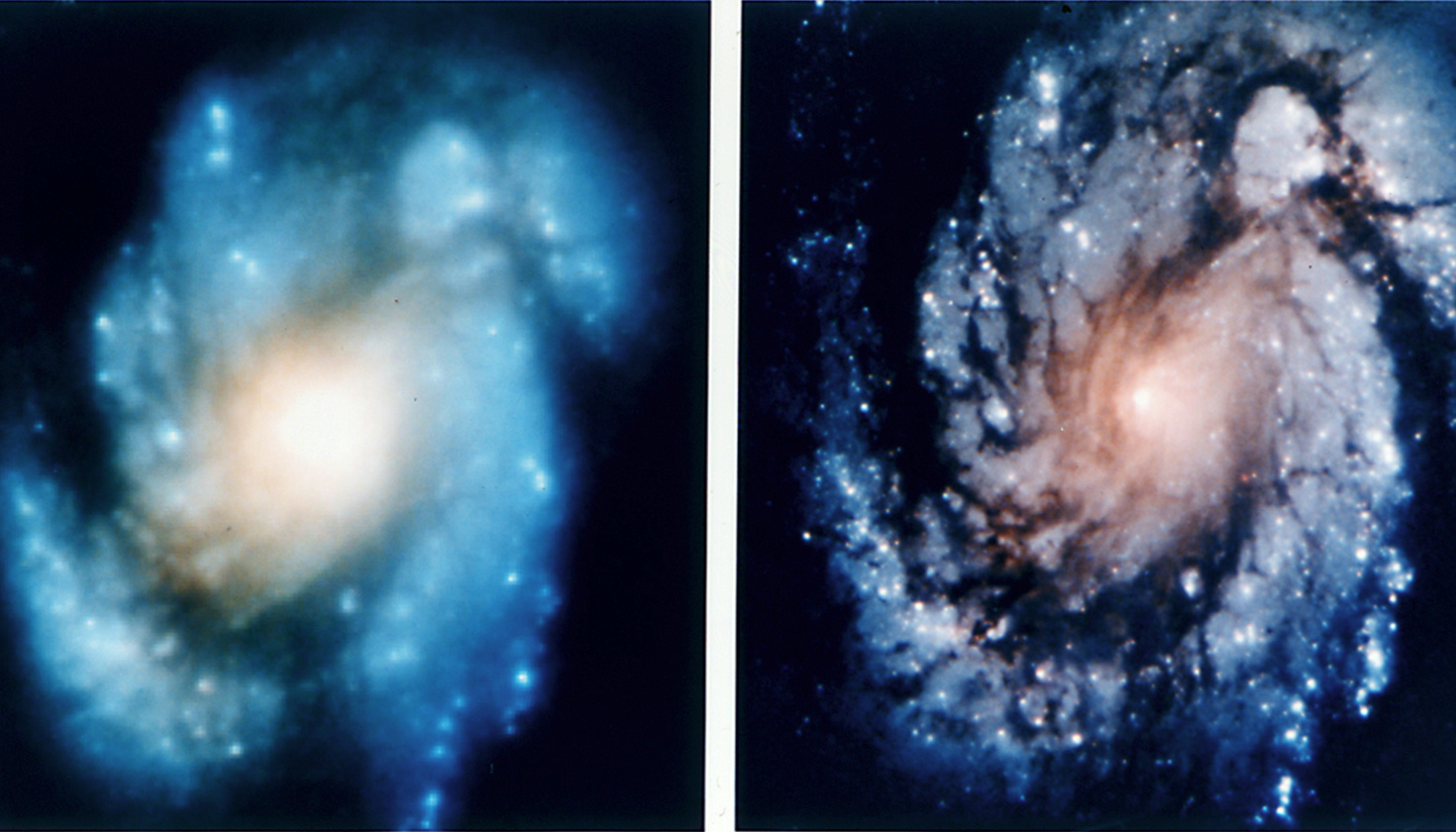

Dramatic improvement in the Hubble Space Telescope's ability to see distant galaxies after corrective optics are installed.

Source:

NASA and ESA, public domain

Dramatic improvement in the Hubble Space Telescope's ability to see distant galaxies after corrective optics are installed.

Source:

NASA and ESA, public domain

In this episode, we'll look at how we can use constants to find more no-op instructions that we can eliminate from our program. The starting code for this episode is on GitHub, on branch part2.

Recall that when a MONAD program starts, the values of w, x, y, z are all zero. Let's look at the start of our MONAD input program and simulate by hand what happens to the four registers:

Wow! Other than reading an input number with inp w, pretty much nothing else happens until that add x 11 instruction. The add x z and mod x 26 instructions have no effect on the registers' values, so we've found more instructions we could optimize out!

In episode 1, our compiler recognized that div z 1 is a no-op because it divides by one. Well, why didn't our compiler also notice that the adjacent instructions are no-ops?

It's because they aren't always no-ops: div z 1 is a no-op regardless of the value of z, but add x z is only a no-op if z is zero. Similarly, the mul x 0 and mod x 26 instructions here are no-ops only because x = 0 before the instruction executes: the operation's result is zero and is written to x, therefore leaving x = 0 unchanged.

The no-ops we've looked at so far are all of the "math operation that had the same result as its input register already held" flavor. There are also other ways in which an instruction might have no effect on the rest of the program. For example, an instruction might calculate a value that is never used, and therefore didn't need to be computed in the first place. We'll explore this idea in detail in an upcoming episode.

In order to optimize out instructions like these, we'll need to teach the compiler to track the possible values registers can have.

Tracking register values

A register's value can either be known exactly, or not known exactly. If a register is known to hold number n, let's call that Exact(n). If the register's value is not known to be any specific number, let's say the register holds Unknown. For our reading and debugging convenience, let's also introduce a third kind of value a register can hold: Input(i), representing the ith input to the program. (For folks not yet comfortable with Rust, quick reminder that usize is the unsigned integer type Rust prefers for counting things.)

optimization.rs. See this code in context in the GitHub repo.

When the program starts, all four registers hold Exact(0). Then, for each instruction, we evaluate a set of rules to determine what the next register state should be. For example, after reading the first program input with the inp w instruction, the w register's value becomes Input(0). Otherwise:

- If the instruction operates on two

Exactvalues, we'll evaluate the operation on those values and record anExactresult. For example,add x 11whenx = Exact(0)would produceExact(11). - If the instruction is a multiplication and one of the operands is

Exact(0), the result isExact(0)regardless of the other operand value. - Otherwise, we aren't able to determine an exact result so we'll record an

Unknowninstead. For example,eql x wbelow is comparing betweenInput(0)andExact(11), and produces anUnknownresult. Similarly, the next instructioneql x 0comparesUnknowntoExact(0), and again produces anUnknownresult.

Applying these rules, we get the following register values starting from the beginning of the program:

Aside: Rust syntax primer

We’re about to use some syntax that may feel unfamiliar to folks not yet used to Rust, so let’s explain it first:

matchis used for pattern-matching in Rust, in the same way that it is used in Python 3.10+ or in functional languages like Scala. It's a handy concept, and I encourage you to learn about it if you haven't encountered it before!if let x = y { <code here> }is shorthand for:match y, and if the patternxmatches, execute the given code block. The Rust book's chapter on it does a great job of explaining its benefits.

Implementing the register value rules

Since inp instructions only take one input instead of two, let's make the code handle them separately. For all other instructions, we can handle them with a function that takes an instruction and two Value inputs, and returns the resulting Value.

optimization.rs. See this code in context in the GitHub repo.

Observant readers might have noticed this code returns Unknown in

some situations where it could have done better.

Cue the foreshadowing music.

We'll improve it further in future episodes.

Finding more no-op instructions

Earlier, we observed that to detect whether add x z is a no-op, we needed to know if x or z are zero — and now we can! In general, we see that:

add x yis a no-op ify = Exact(0). However, it is not a no-op ifx = Exact(0)since in that casexgets overwritten withy's value.mul x yis a no-op ifx = Exact(0)(multiply zero by anything and it stays zero) ory = Exact(1)(multiply anything by one and it doesn’t change). The same no-op rule applies todiv x yas well.- For

mod x y, the MONAD language specifies thatx ≥ 0andy > 0. Therefore,mod x yis a no-op wheneverx < y, since the remainder when dividing a smaller number with a larger one is the smaller number itself. eql x ycan be a no-op in two ways. Ifx = y, we will get the resultx = Exact(1)so we have a no-op ifx = y = Exact(1). Ifx != ythen we’ll get the resultx = Exact(0)so we have a no-op ifx = Exact(0)andyis anyExactvalue exceptExact(0). Soeql x yis a no-op ifx = y = Exact(1)or ifx = Exact(0)andy != Exact(0).- For the forms of these instructions that involve a literal number instead of a second register, such as

add x 11, we simply consider the literal number as its correspondingExactvalue, then apply the same rules as above.

Let’s move forward in two steps. First, let’s write a function that accepts a (non-inp) Instruction and two Value inputs left and right, and returns true if the instruction is a no-op when executed on those values:

optimization.rs. See this code in context in the GitHub repo.

For convenience, I've already defined some helper methods on Instruction that simply return the source/destination register index (the register() function) and the literal or register operand, if the instruction has one (the operand() function).

We can now use is_instruction_no_op to find many more no-op instructions than the remove_div_by_1 function from the last episode could find. Let's update remove_div_by_1 to track registers' values and take advantage of our new functions. It'll still go through the list of instructions, compute how the register values change at each step, and eliminate instructions it discovers are no-ops.

For experienced Rustaceans: this code isn't idiomatic Rust. I'm trying to make it easier to understand for people of any background.

And like that, we've implemented constant propagation! Let's rename remove_div_by_1 to constant_propagation to celebrate our achievement!

Analyzing our optimizations' impact

Let's run our compiler on our Advent of Code input program and measure how well we did:

$ cargo run analyze sample_programs/aoc_challenge.txt

Finished dev [unoptimized + debuginfo] target(s) in 0.02s

Running `.../monad_compiler analyze sample_programs/aoc_challenge.txt`

Original vs optimized length: 252 vs 238 (-14)

Optimized is more efficient by: 5.88%

Adding constant propagation made our no-op detection twice as good as in part 1! We managed to eliminate 7 more instructions compared to last time, for a total improvement of just under 6%.

On the other hand, maybe constant propagation should have had a larger impact? Let's visualize our optimizations to figure out what's going on.

We’ll use cargo run registers <monad_program> to generate a printout of the register values our optimizations determined at every instruction in the program, together with an indication whether that instruction was detected to be a no-op. I’ll include a few relevant snippets of the input program’s printout here — the full printout is available on GitHub.

The beginning of the program starts looking pretty good: in the first 10 instructions, most register values are Exact, and constant propagation helps eliminate 5 of these 10 instructions as no-ops. That’s a lot better than 6%!

Unfortunately, just a bit farther down, the simulated register values quickly become a sea of Unknown, and few if any no-ops are found:

Compiler optimizations use insight about the program's behavior to make it more efficient. When the program starts, its initial state is fully known: all registers have Exact(0) values, and the only unknowns are values derived from input data read by inp instructions. It's an insight-rich environment and our compiler performs nicely!

Unfortunately, our compiler isn't yet sophisticated enough to continue generating insight about the rest of the program, so its performance drops accordingly. The statistics at the end of the printout summarize the situation: 91.6% of the time, at least one of the values used by the instruction was unknown, and 22.3% of the time, both values were unknown. With so few known values to work with, constant propagation unsurprisingly has a limited effect.

Total non-input instructions: 238

- with 1+ non-exact value: 218 (91.6%)

- without any exact values: 53 (22.3%)

Shining a light on the patterns hiding within those Unknown values will be the focus of upcoming Compiler Adventures.

Wrap up + challenge question

Implementing one compiler optimization can open up new opportunities for another optimization to work its magic. We already included part 1's "eliminate no-ops" idea in this episode's constant propagation code. In the next Compiler Adventure, we'll use constant propagation as part of a fascinating new optimization that will become the foundation of most of our future work!

The completed code from this post is on branch part2_finished on GitHub. For a sneak peek of how we can improve our Unknown handling, consider the following program:

inp w

add x w

eql w x

Right now, our compiler says that last eql w x instruction would evaluate to Unknown:

Look carefully at the register values around add x w and eql w x. Can you figure out what the eql w x evaluates to? Is it really Unknown? Why isn't our current constant_propagation() function able to do better?

All this and more in the next Compiler Adventure — see you next time!

If you liked this post, please share it with friends — compilers are for everyone! Reach out to me on Twitter or join the discussion on HackerNews.

Thanks to Hillel Wayne, Russell Cohen, Jonathan Paulson, Jimmy Koppel, Aaron Brooks, Vincent Tjeng, James Logan, and Akshat Bubna for their feedback on drafts of this post. Any mistakes are mine alone.